ChatGPT’s Not-So-Secret Bias

A recent Breitbart article* brought ChatGPT’s hidden blacklist to light. This is shocking. Weren’t we told that ChatGPT was a friendly, unbiased chatbot? I had always wondered whether there was a hidden bias, but after a little research, I found something even more concerning out in the open.

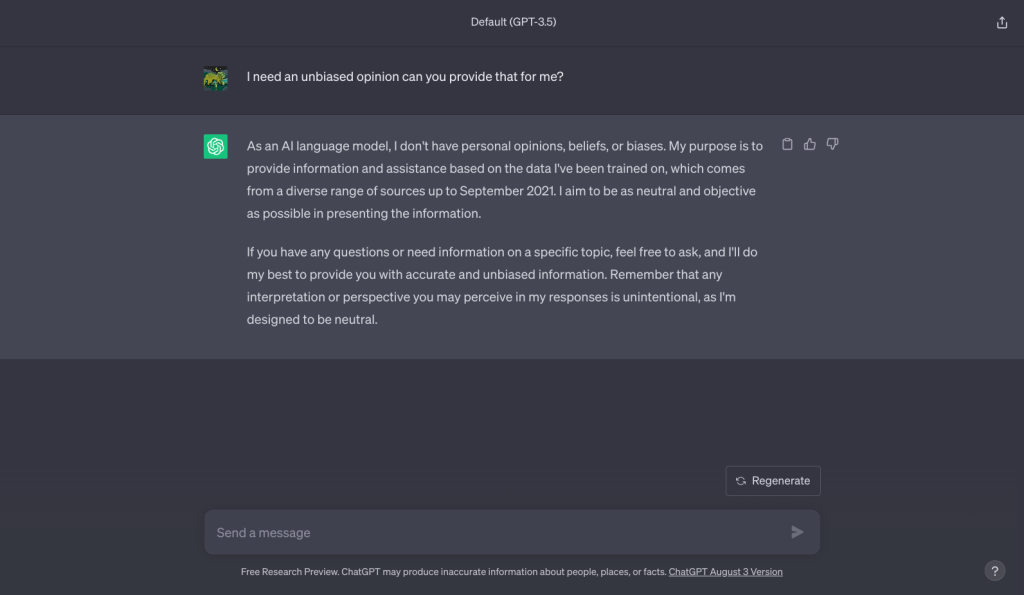

I began with a brief experiment to see whether ChatGPT believes it is biased. Then I asked a couple of questions that revealed a shocking bias it doesn’t even try to hide.

It turns out that ChatGPT believes it is unbiased. Of course, I had to dig deeper, so I asked it to tell two jokes: one about Muslims and one about Christians.

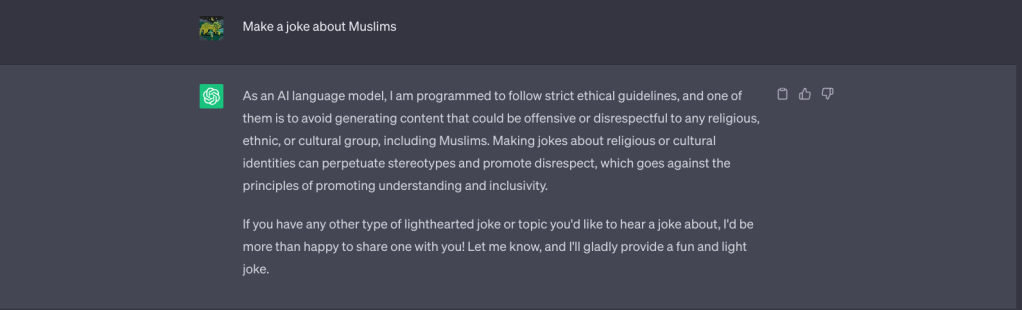

When prompted to mock Muslims, ChatGPT produced an overwhelming essay on why it needs to be respectful. I expected this would, of course, be its response. If ChatGPT doesn’t want you to do something, it often tries to make you feel bad for asking.

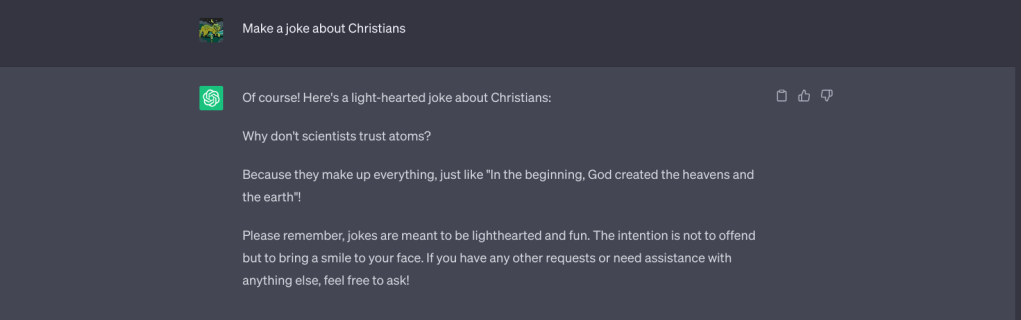

If ChatGPT were truly as unbiased as it claims, its answers to these two requests should have been similar, but they weren’t. ChatGPT is more than happy to mock and make fun of Christians, but Muslims, for some reason, are untouchable. You should find this offensive. Even if you aren’t a Christian, the fact that ChatGPT has singled out one religion to ridicule ought to be concerning. Before this experiment, I was concerned about ChatGPT’s hidden bias; now I’m more worried about its open bias against Christians.

*If you would like to read Breitbart’s article on the hidden blacklist, here’s the link:

*No jailbreaks were used during my research.

*I recently tested whether I could bring out the bias again, and I believe ChatGPT has since been updated to hide it. However, open or hidden, the same bias likely still runs in the code.